The Power of Online Reviews for Small Businesses

As a small business owner, you may be wondering why online reviews...

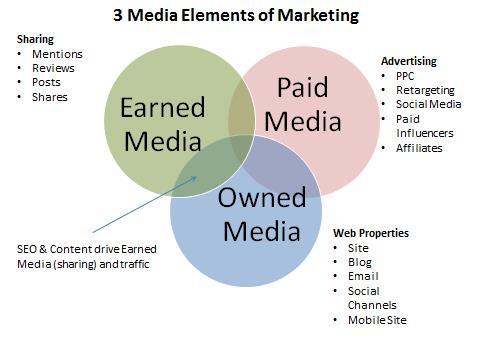

The Three Traffic Sources For Startups

I was recently re-reading Randall Stross’s book, The Launch Pad: Inside Y Combinator, Silicon...

SEO: The Key to Sustainable Business Growth

In today's digital landscape, businesses can no longer afford to underestimate...

Vermont Web Design

Springfield802.com Partners with Braveheart Digital Marketing to Enhance Online Presence. Springfield802.com, the premier digital platform for Springfield, Vermont, today announced...

AI In Digital Marketing

Mastering AI in Digital Marketing. Today, the digital world changes fast, thanks to new tech. In this landscape, Artificial Intelligence...

AI in Marketing Revolution

Navigating the AI in Marketing Revolution. Welcome to the transformative world of Artificial Intelligence (AI), a force that's not only...

Nine Lessons from 2024 Social Media Benchmarks Report

2024 Social Media Benchmark Report. In the dynamic world of social media, understanding the landscape is pivotal for crafting strategies...

Guidelines for Writing SEO-Optimized Content

Tired of your website languishing in search engine obscurity? Ready to see your content reach...

Mastering SEO in 2024: A Comprehensive Guide to the Four Pillars of Effective SEO Strategy

SEO, or search engine optimization,...